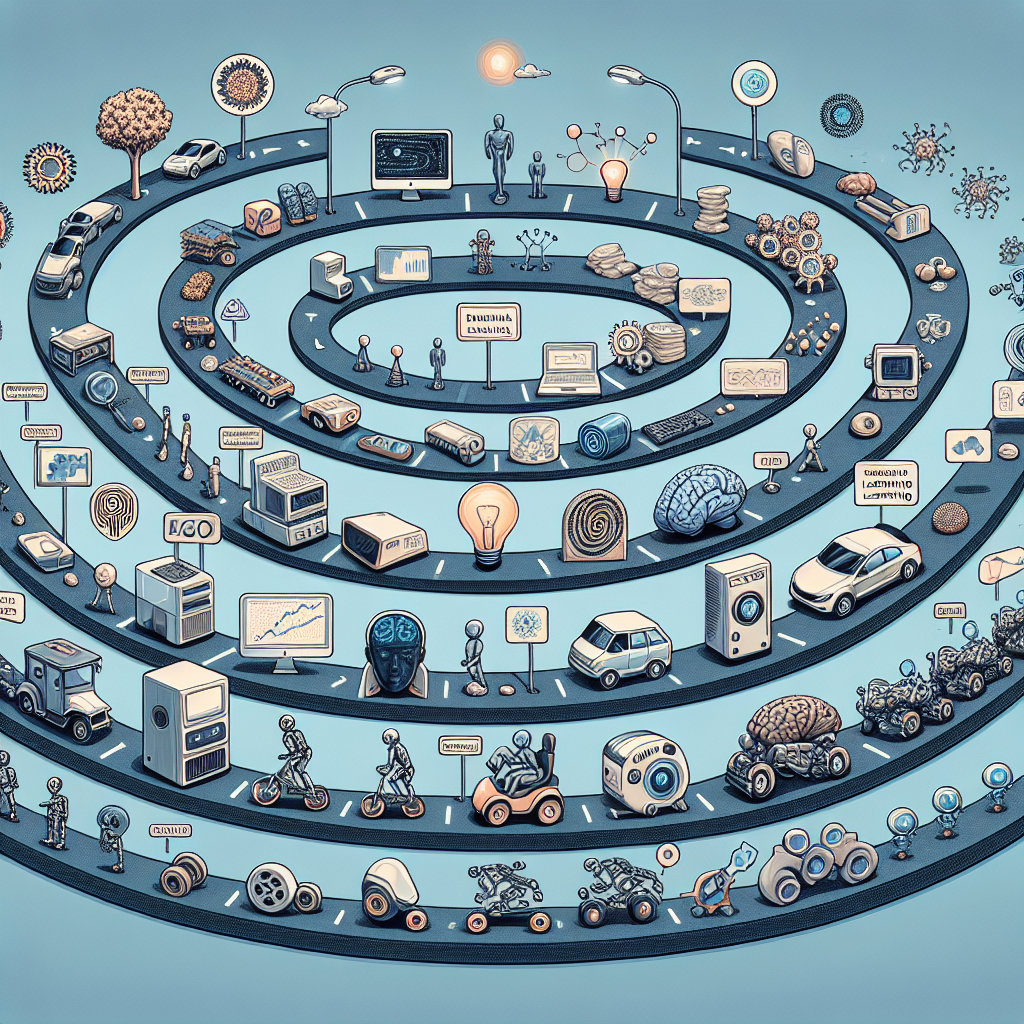

The Road to AGI: A Timeline of Milestones in the Development of Artificial General Intelligence

Artificial General Intelligence (AGI) refers to a machine's ability to perform any intellectual task that a human can. Today's systems are examples of Artificial Narrow Intelligence (ANI). They are built for specific tasks, and in some of those tasks they match or exceed human performance, but they cannot carry that competence over to unrelated problems. The long-term goal is AGI, in which machines match, and perhaps eventually surpass, human intelligence across all areas rather than in a single narrow task.

The development of AGI has been a long and challenging journey, with researchers and scientists working tirelessly to push the boundaries of artificial intelligence. In this article, we will explore the key milestones in the development of AGI, from its inception to the present day, and discuss the challenges and opportunities that lie ahead.

1950s-1960s: The Birth of Artificial Intelligence

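Modern artificial intelligence research took shape in the 1950s. In 1950, Alan Turing published "Computing Machinery and Intelligence," which proposed what is now called the Turing Test. The test was designed to assess whether a machine can exhibit intelligent behavior indistinguishable from that of a human: if a judge conversing by text cannot reliably tell the machine from a person, the machine passes. The term "artificial intelligence" itself was coined by John McCarthy in the proposal for the 1956 Dartmouth workshop, widely regarded as the founding event of the field. Together, these ideas laid the foundation for AI as a discipline and sparked significant interest and research in the area.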

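From the mid-1950s through the 1960s, researchers built the first AI programs. The Logic Theorist (1956), created by Allen Newell, Herbert Simon, and Cliff Shaw, proved theorems from Whitehead and Russell's Principia Mathematica. The same team's General Problem Solver (GPS, described in 1959) tried to solve a broad class of formal problems with a single general strategy, means-ends analysis. These early systems solved specific, well-defined problems and demonstrated a basic level of reasoning and problem-solving capability.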

1970s-1980s: Expert Systems and Neural Networks

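In the 1970s and 1980s, much of AI research shifted toward expert systems, which were designed to mimic the decision-making of human experts in specific domains. An expert system typically stored a specialist's knowledge as a large set of if-then rules and used an inference engine to apply those rules to the facts of a case. Systems such as MYCIN (diagnosing bacterial infections) and XCON (configuring Digital Equipment Corporation computers) showed that the approach could work, and expert systems were applied in industries such as healthcare, finance, and engineering, marking a significant advance in practical AI.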
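
To make the rule-based idea concrete, here is a minimal sketch of forward chaining, one common inference strategy: the engine repeatedly fires any rule whose conditions are all known facts until nothing new can be derived. The rule base (a hypothetical car-trouble diagnosis) and the names `RULES` and `forward_chain` are invented purely for illustration; real expert systems used thousands of rules, certainty factors, and other inference strategies.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule maps a set of required facts (its conditions) to one concluded fact.
Rule = Tuple[FrozenSet[str], str]

# Hypothetical toy rule base, invented for illustration only.
RULES: List[Rule] = [
    (frozenset({"engine_cranks", "no_start"}), "check_fuel"),
    (frozenset({"check_fuel", "fuel_gauge_empty"}), "diagnosis_out_of_fuel"),
    (frozenset({"no_crank", "lights_dim"}), "diagnosis_weak_battery"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Fire rules whose conditions are all satisfied until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires only if every condition is already a known fact.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    observed = {"engine_cranks", "no_start", "fuel_gauge_empty"}
    derived = forward_chain(observed, RULES)
    print(sorted(derived - observed))  # prints ['check_fuel', 'diagnosis_out_of_fuel']
```

Note how the second rule only fires after the first one has added `check_fuel`: chaining lets conclusions from one rule become conditions for another, which is how such systems built up multi-step diagnoses.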

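During this period, researchers also revived interest in neural networks, an idea dating back to the 1940s and 1950s, as a way to loosely model the brain and its ability to learn. A key step was the popularization of the backpropagation algorithm for training multi-layer networks, notably by David Rumelhart, Geoffrey Hinton, and Ronald Williams in 1986. Neural networks are models that learn to recognize patterns by adjusting numerical weights based on data, and they have since become a key component of modern AI systems.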
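
The core idea of learning weights from data, rather than writing rules by hand, can be seen in the perceptron learning rule, one of the earliest neural-network training methods (Frank Rosenblatt, 1958). The minimal sketch below trains a single artificial neuron on the logical AND function: whenever a prediction is wrong, it nudges the weights toward the correct answer. It is an illustration under simplifying assumptions (one neuron, a step activation, a tiny linearly separable dataset); the 1980s revival relied on multi-layer networks trained with backpropagation, which this sketch does not implement.

```python
from typing import List, Tuple

# Each sample is (input vector, target label 0 or 1).
Sample = Tuple[List[float], int]


def predict(weights: List[float], bias: float, x: List[float]) -> int:
    """Step activation: output 1 if the weighted sum exceeds zero, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(
    data: List[Sample], lr: float = 1.0, max_epochs: int = 100
) -> Tuple[List[float], float]:
    """Perceptron learning rule: adjust weights only on misclassified samples."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                errors += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:  # every sample classified correctly, so stop early
            break
    return weights, bias


# Truth table for logical AND, which is linearly separable.
AND_DATA: List[Sample] = [
    ([0.0, 0.0], 0),
    ([0.0, 1.0], 0),
    ([1.0, 0.0], 0),
    ([1.0, 1.0], 1),
]

if __name__ == "__main__":
    w, b = train_perceptron(AND_DATA)
    print([predict(w, b, x) for x, _ in AND_DATA])  # prints [0, 0, 0, 1]
```

Nothing in the code mentions "AND"; the behavior emerges from the training data alone. Swapping in the truth table for OR would train the same neuron to compute OR, while XOR, which is not linearly separable, cannot be learned by a single perceptron at all, one reason multi-layer networks and backpropagation mattered.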

1990s-2000s: Machine Learning and Deep Learning

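The 1990s and 2000s saw rapid progress in machine learning, in which systems learn patterns from data rather than following explicitly programmed rules. Statistical methods such as support vector machines were prominent for much of this period, while neural network research continued alongside them. In 2006, Geoffrey Hinton and colleagues showed an effective way to train networks with many layers, helping launch what became known as deep learning: multi-layer neural networks that learn increasingly abstract representations from large amounts of data.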

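Two architectures that matured during this time proved especially important: convolutional neural networks (CNNs), developed by Yann LeCun and colleagues and applied to handwritten-digit recognition, and recurrent neural networks (RNNs), including the long short-term memory (LSTM) design introduced by Sepp Hochreiter and Jürgen Schmidhuber in 1997. CNNs later transformed computer vision, and RNNs became central to sequence tasks such as speech recognition and natural language processing. These advances paved the way for AI systems that could perform complex perceptual tasks with high accuracy.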
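
The core operation of a CNN layer is sliding a small filter (kernel) across an image and computing a weighted sum at each position. The sketch below implements that operation in plain Python with no padding and a stride of 1, applied to a tiny hypothetical image and a hand-picked vertical-edge kernel; the names `conv2d_valid`, `IMAGE`, and `EDGE_KERNEL` are illustrative only. As in most deep-learning libraries, this is technically cross-correlation (the kernel is not flipped), and in a real CNN the kernel values are learned during training rather than chosen by hand.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (no padding, stride 1) and sum elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output: Matrix = []
    for i in range(out_h):
        row: List[float] = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Hypothetical 4x4 image: dark left half (0.0), bright right half (1.0).
IMAGE: Matrix = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]

# Hand-picked vertical-edge kernel: responds where brightness rises left to right.
EDGE_KERNEL: Matrix = [
    [-1.0, 1.0],
    [-1.0, 1.0],
]

if __name__ == "__main__":
    for out_row in conv2d_valid(IMAGE, EDGE_KERNEL):
        print(out_row)  # each row prints [0.0, 2.0, 0.0]
```

The output is large only in the middle column, exactly where the dark-to-bright edge sits. Because the same small kernel is reused at every position, a CNN needs far fewer parameters than a fully connected network, and a feature such as an edge is detected wherever it appears in the image.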

2010s-Present: Breakthroughs in AI and the Pursuit of AGI

In recent years, several breakthroughs in AI have brought the field closer to AGI. One of the most notable was AlphaGo, developed by DeepMind, which in 2016 defeated Lee Sedol, one of the world's strongest Go players, four games to one. This marked a significant milestone in AI research: Go had long been considered too complex for computers to master, and AlphaGo combined deep neural networks, reinforcement learning, and tree search to outperform a top human player at a complex strategic game.

Since then, researchers have made further advances, particularly in reinforcement learning, transfer learning, and unsupervised learning. These techniques have helped AI systems learn from less labeled data, reuse knowledge across related tasks, and adapt to new environments, although usually only within limited domains. Progress on these fronts is widely seen as a step toward AGI.

Challenges and Opportunities in the Development of AGI

While the road to AGI is paved with groundbreaking advancements and achievements, there are still several challenges that researchers must overcome to achieve true artificial general intelligence. Some of the key challenges include:

1. Generalization and Scalability: Current AI systems struggle to transfer what they learn across different tasks and domains, and their performance often degrades outside the conditions they were trained on. Researchers must develop algorithms that can learn from diverse data sources and adapt to new environments.

2. Interpretability: AI systems often operate as black boxes, making it difficult to interpret their decisions and understand their reasoning. Researchers must develop explainable AI models that can provide insights into how decisions are made.

3. Ethics and Bias: AI systems can inherit biases from the data they are trained on, leading to unfair or discriminatory outcomes. Researchers must address issues of bias and fairness in AI systems to ensure they are used ethically and responsibly.

4. Safety and Control: As AI systems become more advanced, there is a growing concern about their potential to cause harm or act unpredictably. Researchers must develop robust safety mechanisms and control measures to ensure AI systems are safe and trustworthy.

FAQs about AGI

Q: How close are we to achieving AGI?

A: While significant progress has been made in AI research, we are still some distance from true artificial general intelligence, and no existing system is generally accepted as AGI. Expert forecasts vary widely: some researchers expect AGI within a few decades, others think it could take a century or more, and some doubt it can be reached with current approaches at all, given the complexity of replicating human intelligence.

Q: Will AGI replace human workers?

A: While AI has the potential to automate certain tasks and jobs, it is unlikely that AGI will completely replace human workers. Instead, AGI is more likely to augment human abilities and enable us to solve complex problems and make better decisions.

Q: What are the ethical implications of AGI?

A: The development of AGI raises several ethical concerns, such as privacy, security, bias, and accountability. Researchers and policymakers must address these issues to ensure that AGI is developed and deployed in a responsible and ethical manner.

Q: How can I get involved in AGI research?

A: If you are interested in AGI research, there are several ways to get involved, such as pursuing a degree in artificial intelligence or machine learning, attending conferences and workshops, and collaborating with researchers in the field. By staying informed and engaged, you can contribute to the advancement of AGI technology.

In conclusion, the road to AGI is a long and challenging journey, but with continued research and innovation, we are inching closer to achieving true artificial general intelligence. While there are still many challenges and uncertainties ahead, the opportunities and potential benefits of AGI are vast and exciting. By addressing key challenges and ethical considerations, we can ensure that AGI is developed and deployed in a responsible and beneficial manner for society.