Artificial Intelligence (AI) has rapidly become one of the most transformative technologies of our time. From self-driving cars to medical diagnostics, it has the potential to reshape many industries and change the way we live and work. That reach brings responsibility. The ethical implications of AI development are broad and complex, and they call for careful consideration and deliberate action to ensure that AI benefits society as a whole.

One of the key ethical considerations in AI development is the potential for bias and discrimination. An AI system is only as good as the data it is trained on: if that data is biased or incomplete, the system tends to reproduce those flaws in its outputs. For example, AI algorithms used in hiring processes have been found to discriminate against certain groups based on factors such as race or gender. A screening model trained on past hiring decisions can learn to disfavor groups that were hired less often, even when race or gender is never an explicit input. This raises important questions about how to make AI systems fair, and how to detect and correct biases once they are identified.

Another ethical concern is the impact of AI on jobs and the economy. As AI technology advances, there is a growing fear that automation will lead to widespread job loss, particularly in industries that rely heavily on manual labor. The open questions are how to share the benefits of AI equitably and how to support workers who are displaced by it.

Privacy is also a major ethical consideration in AI development. AI systems often rely on vast amounts of personal data to function, raising concerns about how that data is collected, stored, and used. There is a risk that AI systems could be used to invade individuals’ privacy or to manipulate people in harmful or exploitative ways. The challenge is to protect individuals’ privacy rights while still putting AI to work for the greater good.

In addition to these ethical considerations, there are also broader questions about the role of AI in society and how it should be governed. Who should be responsible for regulating AI development and ensuring that it is used responsibly? What rights and protections should be afforded to individuals affected by AI systems? These are complex and challenging questions that require input from a wide range of stakeholders, including policymakers, technologists, ethicists, and the general public.

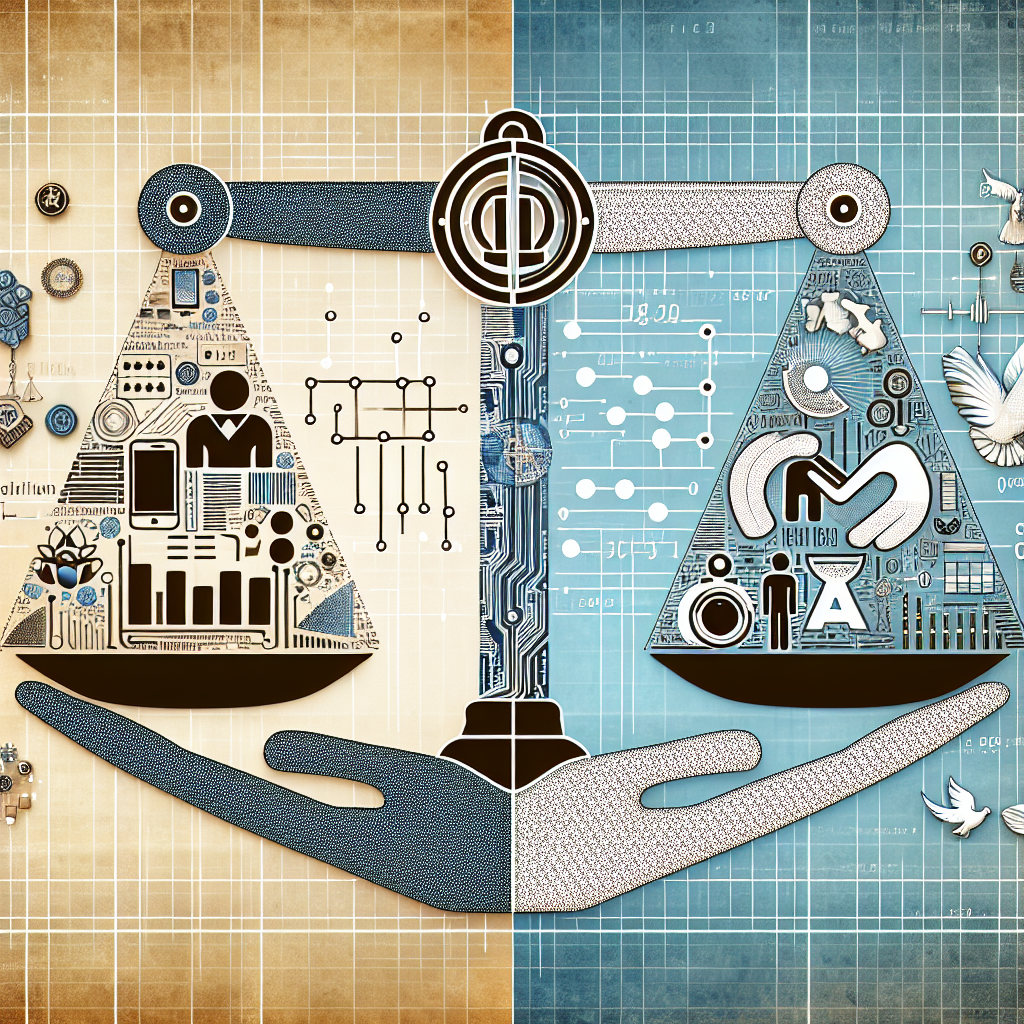

Balancing innovation and responsibility in AI development is no easy task, but it is essential if we are to harness the full potential of this transformative technology while minimizing its risks. It requires a commitment to transparency, accountability, and ethical decision-making at every stage of the AI development process.

FAQs:

Q: What are some examples of bias in AI systems?

A: One example is facial recognition technology, which has been found to be less accurate for individuals with darker skin tones. A likely cause is training data that is skewed towards lighter skin tones, so the algorithm makes more errors on the faces it has seen fewer examples of, leading to discriminatory outcomes for individuals with darker skin.

Q: How can bias in AI systems be addressed?

A: Bias in AI systems can be addressed through a variety of approaches, including improving the diversity and representativeness of the data used to train AI algorithms, implementing bias detection and correction tools, and ensuring that AI systems are regularly audited for bias and discrimination.
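As a rough illustration of what a basic bias audit might look like, the sketch below compares how often each group receives a positive decision and reports the ratio of the lowest rate to the highest. The function names, group labels, and toy data are all hypothetical, and the 0.8 threshold is borrowed from the "four-fifths" rule of thumb used in US employment guidance. It is one possible screening check under these assumptions, not a complete fairness assessment.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the fraction of positive decisions for each group.

    `records` is an iterable of (group_label, was_selected) pairs.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received any positive decision
    return min(rates.values()) / highest

# Toy, made-up decisions: 6 of 10 selected in group_a, 3 of 10 in group_b.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Assumed threshold: flag the system for review if the ratio is below 0.8.
needs_review = ratio < 0.8
print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove discrimination on its own, but it is a reasonable signal that the system and its training data deserve a closer look.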

Q: What are some ways to protect individuals’ privacy in AI development?

A: To protect individuals’ privacy in AI development, it is important to implement robust data protection measures, such as encryption, anonymization, and data minimization. It is also important to obtain individuals’ consent before collecting their personal data and to be transparent about how that data will be used.
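To make data minimization concrete, here is a minimal sketch that keeps only the fields a model actually needs and replaces a direct identifier with a keyed hash. The field names, the `ALLOWED_FIELDS` set, and the example key are hypothetical. Note that a keyed hash is pseudonymization rather than full anonymization, since anyone holding the key can recompute the token for a known identifier.

```python
import hashlib
import hmac

# Hypothetical list of the only fields the downstream model is assumed to need.
ALLOWED_FIELDS = {"age_band", "region"}

def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with an HMAC-SHA256 token (hex string)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def minimize(record: dict, secret_key: bytes) -> dict:
    """Drop every field not in ALLOWED_FIELDS and tokenize the email."""
    reduced = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    reduced["user_token"] = pseudonymize(record["email"], secret_key)
    return reduced

# Made-up record and key for illustration; a real key would live in a secrets store.
raw_record = {
    "email": "person@example.com",
    "full_name": "Example Person",
    "age_band": "30-39",
    "region": "north",
}
example_key = b"replace-with-a-real-secret"

safe_record = minimize(raw_record, example_key)
print(sorted(safe_record))
```

The same person always maps to the same token, so records can still be linked for analysis without the email address or name ever reaching the training pipeline.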

Q: How can we ensure that the benefits of AI are shared equitably?

A: Ensuring that the benefits of AI are shared equitably requires a commitment to social justice and economic equality. This may involve implementing policies such as universal basic income, job retraining programs, and progressive taxation to ensure that the gains from AI technology are distributed fairly among all members of society.