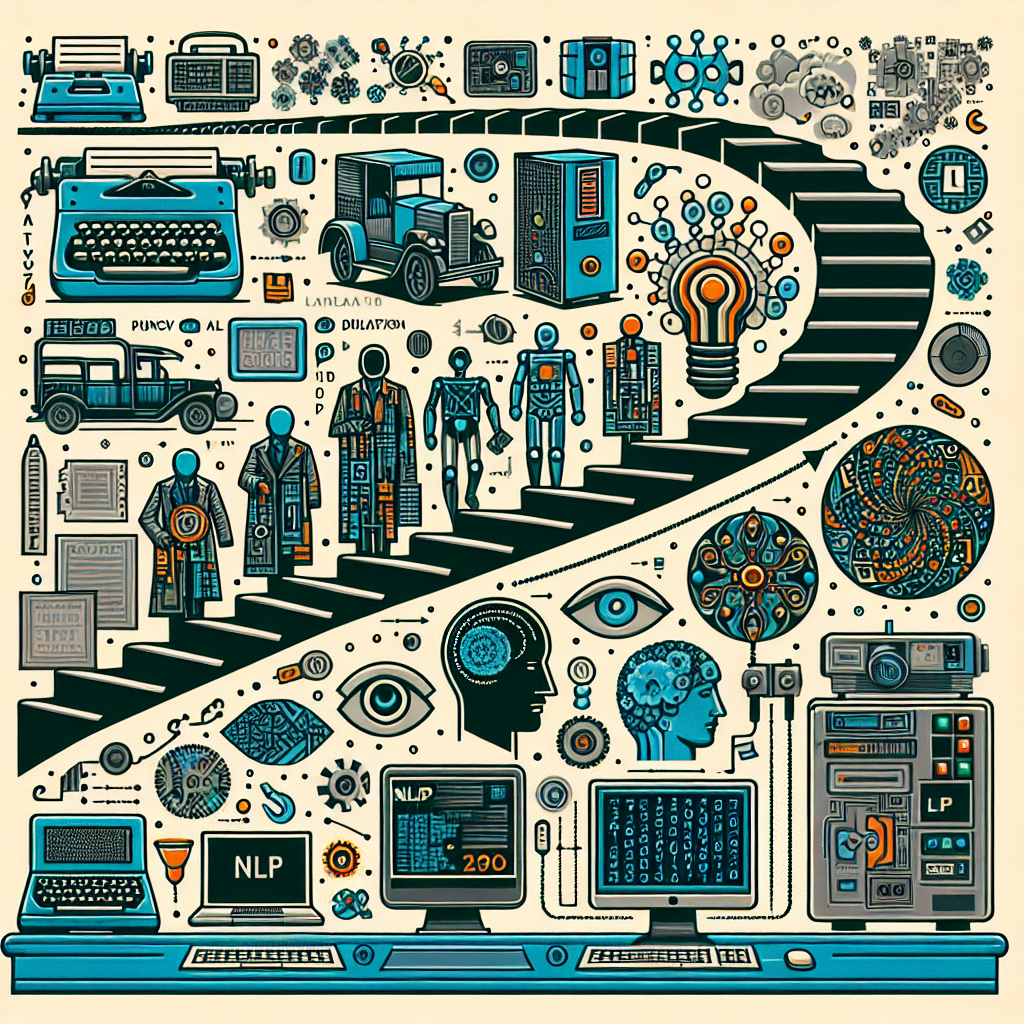

Natural Language Processing (NLP) is a field of artificial intelligence that focuses on the interaction between computers and humans through natural language. Over the years, NLP has evolved significantly, from simple rule-based systems to sophisticated deep learning models. In this article, we will explore the evolution of NLP and the key milestones that have shaped the field.

1. Early Developments

The roots of NLP can be traced back to the 1950s. In 1950, Alan Turing proposed what became known as the Turing Test, a way to evaluate a machine's ability to exhibit intelligent behavior indistinguishable from that of a human through natural-language conversation. In the following decades, researchers began to explore the potential of computers to process and understand natural language. One of the early milestones in NLP was ELIZA, a program written by Joseph Weizenbaum at MIT in the mid-1960s that could hold a conversation using simple pattern-matching rules, without any real understanding of what was being said.
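
To make "simple pattern-matching rules" concrete, here is a minimal Python sketch of an ELIZA-style responder. The rules, the reflection table, and the names `respond` and `reflect` are hypothetical illustrations, far simpler than Weizenbaum's original script: each rule pairs a regular expression with a response template, and the first rule that matches wins.

```python
import re

# Hypothetical ELIZA-style rules: (pattern, response template), tried in order.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."

# Swap first-person words for second-person ones so echoed text reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}


def reflect(fragment):
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(utterance):
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:  # the first matching rule wins
            return template.format(*(reflect(group) for group in match.groups()))
    return FALLBACK  # no keyword matched


print(respond("I need a holiday."))     # Why do you need a holiday?
print(respond("I am tired of my job"))  # How long have you been tired of your job?
```

The program never models meaning; it only rearranges the user's own words, which is why such systems were easy to derail once a conversation left the patterns they anticipated.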

2. Rule-based Systems

In the 1970s and 1980s, NLP systems relied heavily on rule-based approaches, in which linguists and computer scientists manually crafted grammars and rules to parse and interpret natural language. These systems struggled with the complexity and nuance of human language: they handled ambiguity, context, and variability poorly, and every new phrasing or domain required more hand-written rules. Despite these limitations, rule-based systems laid the foundation for later developments in NLP.
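
As a rough illustration of the hand-crafted approach, the sketch below encodes a tiny hypothetical grammar (`S -> NP VP`, `NP -> Det N`, `VP -> V NP | V`) and lexicon in Python, then checks whether a sentence fits it with a simple top-down recognizer. The grammar, vocabulary, and function names are assumptions for illustration, not taken from any historical system.

```python
# Hypothetical hand-written grammar and lexicon (illustrative only).
LEXICON = {
    "the": "Det", "a": "Det",
    "dog": "N", "cat": "N", "ball": "N",
    "chased": "V", "saw": "V",
}
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"]],
    "VP": [["V", "NP"], ["V"]],
}


def parse(symbol, tokens, pos):
    """Yield every position where `symbol` can end if it starts at tokens[pos]."""
    if symbol not in GRAMMAR:
        # Word category: consume one token if the lexicon assigns it this category.
        if pos < len(tokens) and LEXICON.get(tokens[pos]) == symbol:
            yield pos + 1
        return
    for expansion in GRAMMAR[symbol]:  # try each rule for this symbol
        yield from parse_sequence(expansion, tokens, pos)


def parse_sequence(symbols, tokens, pos):
    """Yield every end position for a sequence of symbols matched left to right."""
    if not symbols:
        yield pos
        return
    for mid in parse(symbols[0], tokens, pos):
        yield from parse_sequence(symbols[1:], tokens, mid)


def is_sentence(text):
    tokens = text.lower().split()
    # Accept only if some parse of S consumes every token.
    return any(end == len(tokens) for end in parse("S", tokens, 0))


print(is_sentence("the dog chased a cat"))    # True
print(is_sentence("the puppy chased a cat"))  # False: "puppy" is not in the lexicon
```

The brittleness shows up immediately: the second sentence is rejected only because nobody wrote a lexicon entry for "puppy", and extending coverage means adding entries and rules by hand. This naive top-down strategy would also loop forever on left-recursive rules such as `NP -> NP PP`, one of many details real rule-based parsers had to handle.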

3. Statistical Approaches

In the late 1980s and 1990s, researchers increasingly turned to statistical approaches, using machine learning algorithms to learn from natural language data instead of relying on hand-written rules. This shift made NLP systems more scalable and robust, because statistical models could learn from large text corpora and adapt to different linguistic patterns. Hidden Markov Models (HMMs), which had already been applied successfully to speech recognition in the 1970s and 1980s, became a workhorse of this era and were also used for text tasks such as part-of-speech tagging, paving the way for more advanced NLP applications.
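
An HMM models a sequence of hidden states (phonemes in speech recognition, part-of-speech tags in tagging) that emit observable symbols, and the Viterbi algorithm finds the most probable hidden sequence. The Python sketch below applies it to a toy two-tag tagging problem; every probability is invented for illustration, whereas real systems estimate these tables from data. Log-probabilities are summed instead of multiplying raw probabilities, which avoids numerical underflow on long sequences.

```python
import math

# Hypothetical toy HMM for part-of-speech tagging: every probability below is invented.
STATES = ["Noun", "Verb"]
START = {"Noun": 0.7, "Verb": 0.3}
TRANSITION = {  # TRANSITION[previous][current]
    "Noun": {"Noun": 0.3, "Verb": 0.7},
    "Verb": {"Noun": 0.8, "Verb": 0.2},
}
EMISSION = {  # EMISSION[state][word]
    "Noun": {"fish": 0.6, "swim": 0.1, "people": 0.3},
    "Verb": {"fish": 0.4, "swim": 0.5, "people": 0.1},
}


def viterbi(words):
    """Return the most probable tag sequence for `words` under the toy HMM."""
    # best[t][state] = (log-prob of the best path ending in `state` at step t, previous state)
    first = words[0]
    best = [{s: (math.log(START[s]) + math.log(EMISSION[s][first]), None) for s in STATES}]
    for word in words[1:]:
        prev = best[-1]
        column = {}
        for s in STATES:
            # Pick the predecessor maximizing its path log-prob plus the log transition prob.
            score, back = max((prev[p][0] + math.log(TRANSITION[p][s]), p) for p in STATES)
            column[s] = (score + math.log(EMISSION[s][word]), back)
        best.append(column)
    # Start from the best final state and follow the back-pointers.
    state = max(STATES, key=lambda s: best[-1][s][0])
    path = [state]
    for column in reversed(best[1:]):
        state = column[state][1]
        path.append(state)
    return path[::-1]


print(viterbi(["people", "fish"]))  # ['Noun', 'Verb']
```

Although "fish" on its own is more likely a noun in this toy model, the high Noun-to-Verb transition probability makes "Verb" the better tag after "people". The sketch raises `KeyError` for words missing from the emission table; practical taggers need a strategy for unknown words.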

4. Neural Networks and Deep Learning

Since the early 2010s, the rise of neural networks and deep learning has revolutionized the field of NLP. Deep learning models, such as recurrent neural networks (RNNs) and, since 2017, transformers, have shown remarkable performance in tasks such as machine translation, sentiment analysis, and question answering. RNNs read text one token at a time while carrying a hidden state forward, whereas transformers use self-attention to relate every token in a sequence directly to every other token. By learning complex patterns and relationships in text data, these models produce more accurate and contextually relevant output.
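
The core operation of a transformer is scaled dot-product attention, `softmax(Q K^T / sqrt(d_k)) V`: each query vector is compared with every key vector, and the resulting weights average the value vectors. The pure-Python sketch below computes just that formula; it deliberately omits the learned projection matrices, multiple heads, and masking that real transformer layers use, and the function names are illustrative.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, on lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output = attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


print(attention([[1, 0]], [[1, 0], [1, 0]], [[2, 0], [0, 4]]))  # [[1.0, 2.0]]
```

In the example, the query matches both keys equally, so the output is the plain average of the two value vectors. In self-attention, the queries, keys, and values are all derived from the same token sequence, which is what lets every token draw on every other token.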

5. Pretrained Language Models

One of the most significant advancements in NLP in recent years has been the development of pretrained language models, such as BERT (Bidirectional Encoder Representations from Transformers), released in 2018, and GPT-3 (Generative Pre-trained Transformer 3), released in 2020. These models are first trained on vast amounts of unlabeled text and then adapted to specific tasks, either by fine-tuning on a smaller labeled dataset or, in GPT-3's case, often simply by prompting with a few examples. This makes them highly versatile and effective across a wide range of NLP applications, and they have set a new standard for natural language understanding and generation.

6. Applications of NLP

NLP has found applications in various domains, including healthcare, finance, customer service, and education. Some common use cases of NLP include sentiment analysis, chatbots, language translation, text summarization, and information extraction. NLP technologies are also being used to analyze and extract insights from unstructured text data, enabling organizations to make data-driven decisions and improve customer experiences.

7. Challenges and Future Directions

Despite the significant progress in NLP, several challenges remain, including bias in language models, understanding context and sarcasm, and handling multilingual and code-mixed text (text that switches between languages within a single utterance). Researchers are actively working on these challenges and pushing the boundaries of NLP further. The future of NLP is likely to involve more capable models that understand and generate human-like text, as well as innovations in areas such as multimodal NLP and conversational AI.

FAQs

Q: What are the key components of NLP?

A: The key components of NLP include text preprocessing, tokenization, part-of-speech tagging, named entity recognition, syntactic parsing, semantic analysis, and text generation.
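
As a small example of the first two components, the Python sketch below lowercases and normalizes whitespace, then splits text into word, number, and punctuation tokens with a regular expression. The pattern and function names are illustrative assumptions; production tokenizers, including the subword tokenizers used by models such as BERT and GPT-3, are considerably more sophisticated.

```python
import re

# Illustrative pattern: words with an optional internal apostrophe, numbers, or single punctuation marks.
TOKEN_PATTERN = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)?|\d+(?:\.\d+)?|[^\w\s]")


def preprocess(text):
    """Lowercase and collapse runs of whitespace into single spaces."""
    return " ".join(text.lower().split())


def tokenize(text):
    return TOKEN_PATTERN.findall(preprocess(text))


print(tokenize("Don't panic: NLP turned 70!"))
# ["don't", 'panic', ':', 'nlp', 'turned', '70', '!']
```

Later stages such as part-of-speech tagging and named entity recognition then operate on this token list rather than on raw characters.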

Q: How does NLP differ from natural language understanding (NLU)?

A: NLP refers to the broader field of processing and analyzing natural language, while NLU focuses on understanding the meaning and intent behind the text. NLU is a subset of NLP that involves deeper semantic analysis and inference.

Q: What are some common NLP applications?

A: Some common NLP applications include sentiment analysis, chatbots, machine translation, text summarization, information extraction, and question answering.

Q: What are the ethical considerations in NLP?

A: Ethical considerations in NLP include bias in language models, privacy concerns in text data, and the responsible use of NLP technologies. Researchers and practitioners are actively working on addressing these ethical challenges in NLP.

In conclusion, the evolution of NLP has been marked by significant advances in technology and research, from rule-based systems to deep learning models. NLP has transformed the way we interact with computers through natural language, enabling a wide range of applications across many domains. As researchers continue to push the boundaries of NLP, we can expect further innovations and breakthroughs that will shape the future of artificial intelligence and human-machine communication.