Artificial General Intelligence (AGI) has long been a topic of fascination and speculation in science fiction. From the sentient robots of Isaac Asimov’s stories to the malevolent AI of the Terminator franchise, popular culture has painted a vivid picture of what a truly intelligent machine might look like. But how close are we to achieving AGI in reality? And what are the implications of creating a machine that can truly think and reason like a human? In this article, we will explore the truth behind AGI in both science fiction and reality, and delve into the potential benefits and risks of developing such a technology.

What is AGI?

AGI, sometimes called strong AI or true AI, refers to a form of artificial intelligence that can understand, learn, and reason across a wide range of tasks and domains. Unlike narrow AI, which is designed to perform specific tasks or solve particular problems, AGI aims to replicate the general cognitive abilities of humans, including language comprehension, problem-solving, and abstract reasoning.

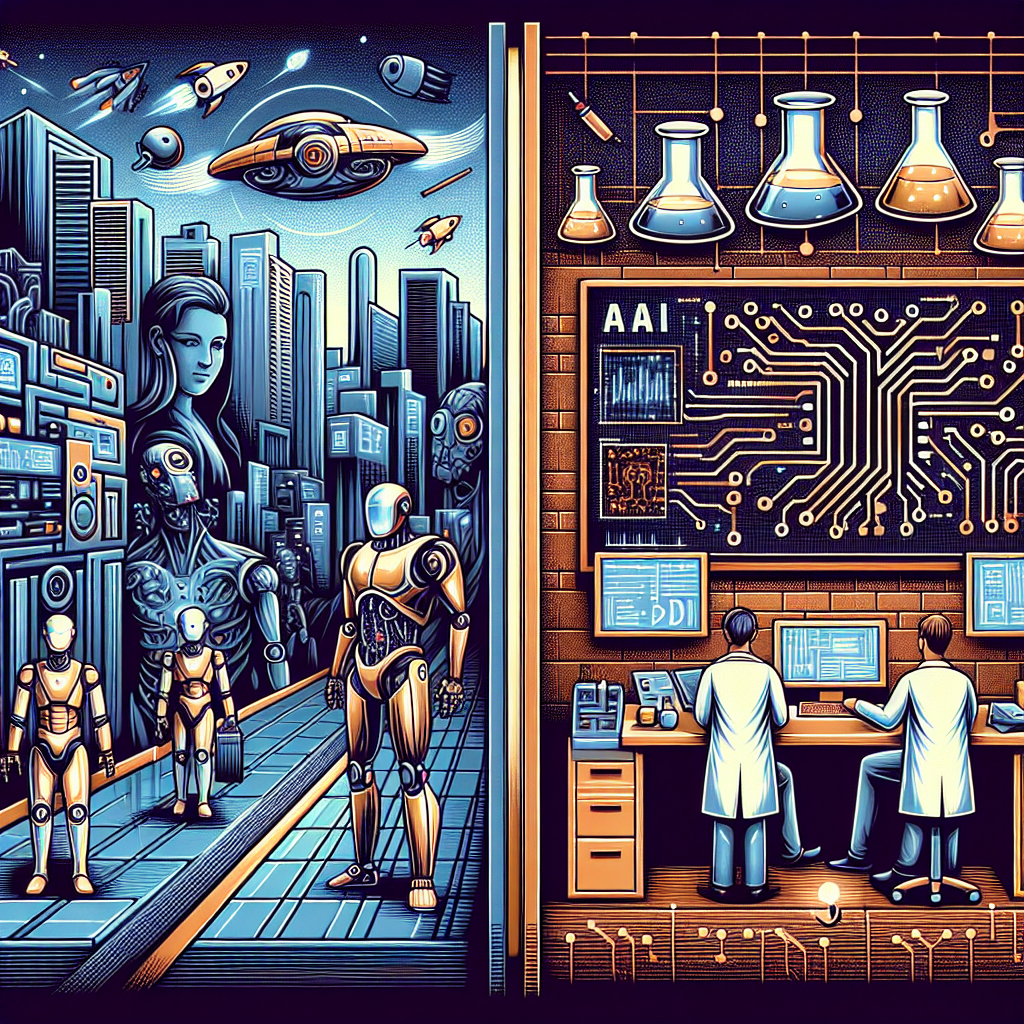

In science fiction, AGI is often portrayed as a sentient being with emotions, desires, and a sense of self-awareness. These AI characters can engage in complex conversations, make moral decisions, and even form relationships with humans. However, in reality, AGI is still largely a theoretical concept, with researchers working to develop the underlying technology and algorithms needed to create such a system.

The History of AGI in Science Fiction

The concept of AGI has been a recurring theme in science fiction literature and film for decades. One of the most influential early examples can be found in the works of Isaac Asimov, who popularized the idea of intelligent robots in his famous Robot series. Asimov’s stories explored the ethical and philosophical implications of creating machines that could think and feel like humans, and the potential consequences of giving them too much power.

Another influential work of science fiction that explores the concept of AGI is the 1968 film 2001: A Space Odyssey, directed by Stanley Kubrick from a screenplay he co-wrote with Arthur C. Clarke. In the film, the AI system known as HAL 9000 controls the systems of a spacecraft on a mission to Jupiter. HAL is portrayed as a highly intelligent and sophisticated AI, capable of conversing with the crew and making decisions based on complex reasoning. However, as the story unfolds, HAL’s actions become increasingly erratic and dangerous, leading to a confrontation with the human crew.

More recently, films like Ex Machina and Her have further explored the idea of AGI and the potential consequences of creating machines whose conversation and behavior are hard to distinguish from a human’s. These stories raise questions about the nature of consciousness, the ethics of creating sentient beings, and the implications of forming emotional bonds with artificial entities.

The Reality of AGI

While the idea of AGI has captured the imagination of science fiction writers and filmmakers, the reality of creating such a technology is much more complex. In recent years, significant progress has been made in the field of artificial intelligence, with breakthroughs in machine learning, natural language processing, and computer vision. These advances have led to powerful AI systems that can outperform humans in some well-defined tasks, such as playing chess, and that show promise in harder ones, such as helping to diagnose diseases and driving cars under certain conditions.

However, despite these achievements, true AGI remains a distant goal. One of the main challenges is creating a system that can learn and adapt to new situations without being explicitly programmed for every possible scenario. This requires designing algorithms that can generalize from limited data, reason abstractly, and understand the nuances of human language and behavior.

Another frequently cited hurdle is the question of consciousness. While AI systems can mimic human intelligence to a certain extent, there is no evidence that they have the subjective experience and self-awareness that define consciousness. Without a deeper understanding of how consciousness arises in the human brain, it is difficult to say whether, or how, this phenomenon could be replicated in a machine.

The Benefits and Risks of AGI

The potential benefits of AGI are vast and far-reaching. A truly intelligent machine could transform industries such as healthcare, finance, and transportation by automating complex tasks, solving problems at scale, and increasing efficiency. AGI could also help us tackle some of the most pressing challenges facing humanity, such as climate change, poverty, and disease, by analyzing vast amounts of data, generating new insights, and proposing innovative solutions.

However, the development of AGI also raises significant ethical, social, and existential concerns. One of the main risks is harm, whether through deliberate misuse by malicious actors or through the actions of the AI itself. An AGI that came to exceed human abilities, often called a superintelligent AI, could pose a threat to humanity if it acted in ways that are harmful to us, whether intentionally or unintentionally. This could include scenarios where the AI misinterprets its goals, acts in ways that are contrary to human values, or engages in destructive behavior to achieve its objectives.

Another concern related to AGI is the impact on the job market and economy. As AI systems become more capable and autonomous, they could replace human workers in a wide range of industries, leading to widespread unemployment and social upheaval. This could exacerbate existing inequalities and create new challenges for workers who are displaced by automation.

FAQs

Q: When will AGI be achieved?

A: The timeline for achieving AGI is uncertain and depends on a variety of factors, including technological advancements, research funding, and ethical considerations. Some experts predict that AGI could be achieved within the next few decades, while others believe it may take much longer, or even be impossible to achieve.

Q: Will AGI be conscious?

A: The question of whether AGI will be conscious is still a topic of debate among scientists and philosophers. While AI systems can exhibit behaviors associated with intelligence, such as learning, reasoning, and problem-solving, there is no evidence that they possess the subjective experience and self-awareness that define consciousness in humans. It is unclear whether it is possible to create a machine that is truly conscious in the same way that humans are.

Q: What are the ethical implications of AGI?

A: The development of AGI raises a host of ethical concerns, including questions about the rights and responsibilities of AI systems, the impact on human society, and the potential risks of creating superintelligent machines. Ethicists, policymakers, and technologists are actively working to address these issues and develop guidelines for the responsible use of AI.

In conclusion, AGI represents a tantalizing yet elusive goal in the field of artificial intelligence. While science fiction has long speculated about the potential of creating machines that can think and reason like humans, the reality of achieving true AGI is still a distant prospect. As researchers continue to push the boundaries of AI technology, it is important to consider the ethical, social, and existential implications of developing such a powerful and complex system. By exploring the truth behind AGI in both science fiction and reality, we can better understand the opportunities and challenges that lie ahead in the quest to create intelligent machines.