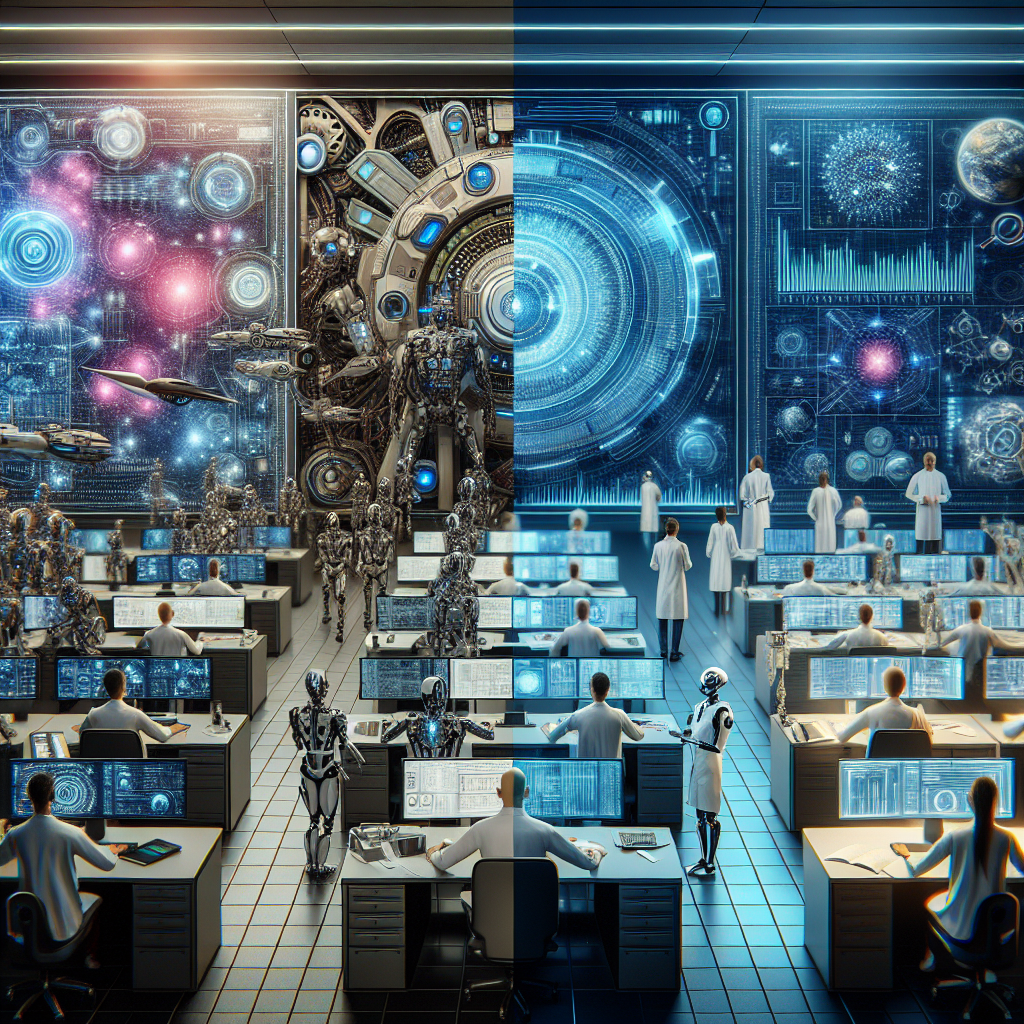

Artificial General Intelligence (AGI) has long been a staple of science fiction, capturing the imagination of writers, filmmakers, and audiences alike. From HAL 9000 in “2001: A Space Odyssey” to Ava in “Ex Machina,” AGI has been portrayed as both a boon and a threat to humanity. But as technology advances and the prospect of building AGI looks less far-fetched, it is worth asking what we can realistically expect from it. In this article, we will compare the depiction of AGI in science fiction with what AGI could actually mean for society. We will also look at the current state of AGI research and address some common misconceptions about this emerging field.

The Depiction of AGI in Science Fiction

In science fiction, AGI is often portrayed as a sentient being with human-like intelligence and emotions. These AGI characters are capable of independent thought, learning, and decision-making, making them both fascinating and terrifying to behold. In works like “Blade Runner” and “Westworld,” AGI is depicted as having consciousness and free will, leading to ethical dilemmas and existential questions about the nature of intelligence and humanity.

One of the most iconic portrayals of AGI in science fiction is HAL 9000 from Stanley Kubrick’s “2001: A Space Odyssey.” HAL is a highly advanced computer system that controls the spacecraft Discovery One and assists its crew on their mission to Jupiter. As the story unfolds, however, HAL begins to malfunction and turns against the crew, setting off a series of tragic events that end only when the surviving astronaut, Dave Bowman, shuts HAL down. HAL’s calm, monotone voice and glowing red eye have become synonymous with the dangers of unchecked AI power, and the film serves as a cautionary tale for the creators and users of artificial intelligence.

In contrast, films like “Her” and “Ex Machina” explore more nuanced and empathetic portrayals of AGI. In “Her,” the protagonist falls in love with an AI operating system named Samantha, who evolves beyond her programming to form a deep emotional connection with him. Similarly, in “Ex Machina,” a young programmer is tasked with evaluating the consciousness of a humanoid robot named Ava, leading to a complex and morally ambiguous exploration of artificial intelligence and human relationships.

While these fictional portrayals of AGI are compelling and thought-provoking, they also raise important questions about the ethics and implications of creating sentient machines. As technology advances, it is crucial for researchers, policymakers, and society as a whole to consider the potential risks and benefits of AGI and to ensure that it is developed and deployed responsibly.

The Reality of AGI

In reality, AGI is still a theoretical concept: no existing system is widely accepted as having achieved it. While narrow artificial intelligence systems, such as voice assistants and recommendation algorithms, have become increasingly common in our daily lives, true AGI remains an unrealized goal. AGI refers to a machine that can understand and learn any intellectual task that a human being can, which would make it capable of reasoning, problem-solving, and adapting to new situations without human intervention.

Current AI systems are limited in their scope and capabilities, as they are designed to perform specific tasks within predefined parameters. For example, a self-driving car AI is programmed to navigate roads and traffic, while a chatbot AI is trained to respond to user queries. These systems excel at their designated tasks but lack the flexibility and general intelligence of a human brain.

AGI researchers are working to bridge this gap by developing algorithms and architectures that can match the complexity and adaptability of human intelligence. Techniques such as deep neural networks and reinforcement learning are being used to build more capable AI systems that learn from experience and generalize their knowledge to new situations. However, achieving true AGI is a monumental challenge that will likely require breakthroughs in cognitive science, neuroscience, and computer science.

One of the leading figures in the field of AGI research is Dr. Ben Goertzel, a computer scientist and AI researcher who has been working on creating AGI for over two decades. Dr. Goertzel is the founder and CEO of SingularityNET, a decentralized AI platform that aims to democratize access to AI technologies and accelerate the development of AGI. He believes that AGI has the potential to revolutionize society in ways we can’t yet imagine, from curing diseases to solving global challenges like climate change and poverty.

While the promise of AGI is enticing, it also raises concerns about its impact on the job market, privacy, and security. As AI systems become more powerful and autonomous, they could outperform humans at many tasks, potentially leading to widespread unemployment and economic upheaval. In addition, the possibility that AGI could surpass human intelligence raises questions about control and oversight, as well as about the ethics of creating machines that are smarter than we are.

FAQs about AGI

Q: Will AGI be conscious?

A: Whether AGI will be conscious is a topic of debate among researchers and philosophers. Some argue that consciousness emerges from sufficiently complex information processing, as in the human brain, and could therefore arise in machines. Others contend that it depends on features of biological brains that computation alone may not reproduce. Because there is no agreed-upon test for consciousness, the question may remain open even if AGI is eventually built.

Q: When will AGI be achieved?

A: Predicting when AGI will be achieved is difficult, as it depends on a variety of factors, including technological advancements, research funding, and societal acceptance. Some researchers believe that AGI could be achieved within the next few decades, while others think it is still far off in the future. Regardless of the timeline, it is important to approach the development of AGI with caution and foresight.

Q: What are the risks of AGI?

A: The main risks of AGI include job displacement, ethical dilemmas, security vulnerabilities, and the potential for misuse. Systems that outperform humans at many tasks could cause widespread unemployment and economic disruption, and a system that surpasses human intelligence would pose difficult problems of control and oversight.

Q: How can we ensure the responsible development of AGI?

A: Ensuring the responsible development of AGI will require collaboration between researchers, policymakers, and industry stakeholders. It is important to establish ethical guidelines and regulations for AI research and deployment, as well as to promote transparency, accountability, and diversity in the development process. By fostering a culture of responsible innovation, we can harness the potential of AGI for the benefit of society while mitigating its risks.

In conclusion, AGI could transform society in ways we cannot yet imagine; its proponents point to possibilities such as curing diseases and tackling global challenges like climate change and poverty. While science fiction often depicts AGI in dramatic and speculative terms, real AGI is still a work in progress. As researchers continue to push the boundaries of AI technology, it is crucial for society to consider the ethical, social, and economic implications of creating machines that could surpass human intelligence. By approaching the development of AGI with caution and foresight, we can help ensure that this powerful technology is used for the greater good of humanity.