Artificial General Intelligence (AGI) is the holy grail of artificial intelligence research. AGI refers to a machine, still hypothetical, that could understand, learn, and apply knowledge in a way that rivals human intelligence. While narrow AI systems excel at specific tasks, such as image recognition or language translation, AGI research aims to replicate the breadth and depth of human intelligence across a wide range of domains.

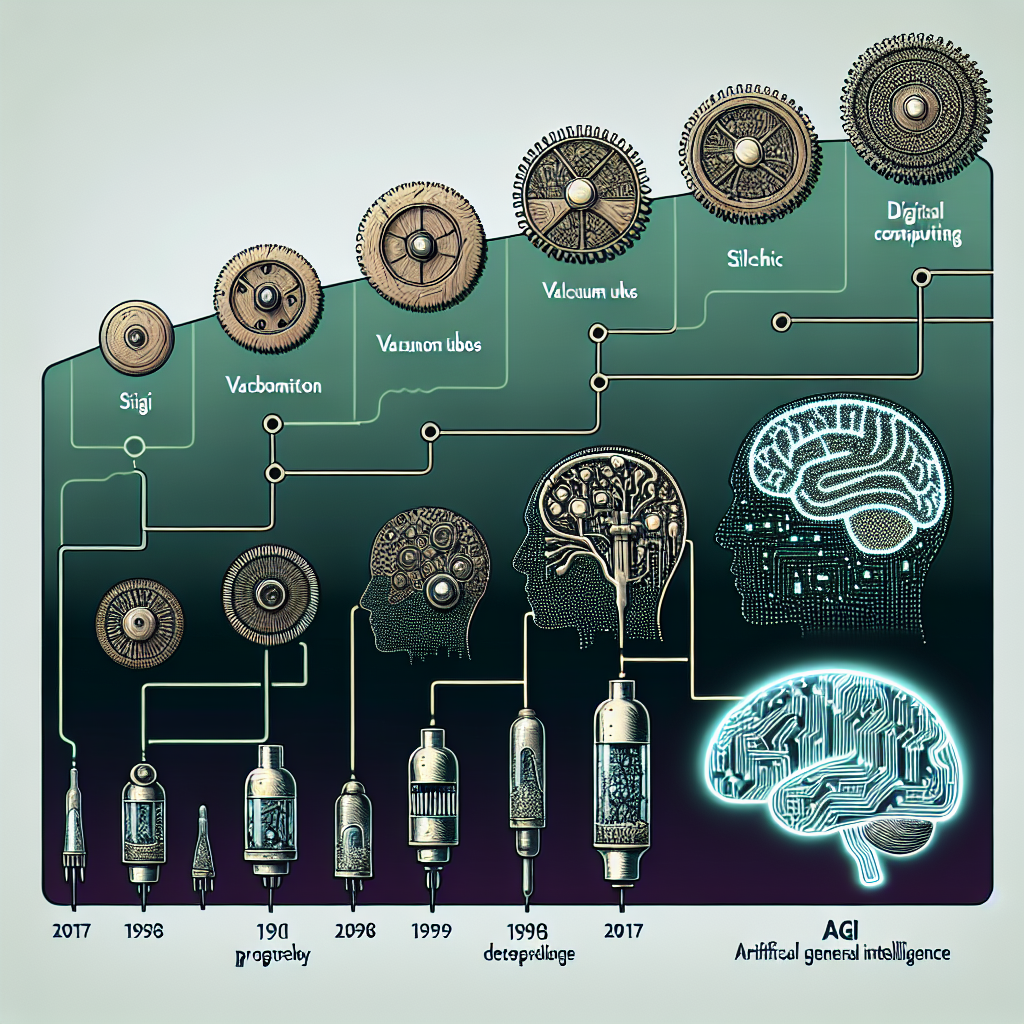

The concept of AGI has been around for decades, with researchers and scientists working tirelessly to develop systems that can truly think and reason like humans. In this article, we will explore the evolution of AGI, tracing its development over time and examining the challenges and breakthroughs that have shaped the field.

The Early Days of AI

The roots of AGI can be traced back to the early days of artificial intelligence research in the 1950s and 1960s. Pioneers such as Alan Turing, who proposed his “imitation game” test of machine intelligence in 1950, and John McCarthy, who coined the term “artificial intelligence” for the 1956 Dartmouth workshop, laid the foundation for modern AI by developing theories and programs intended to reproduce aspects of human reasoning. Early AI systems were designed to perform specific tasks, such as playing chess or solving mathematical problems, but could not generalize their knowledge to new situations.

One of the first attempts at creating a general-purpose reasoning program was the General Problem Solver (GPS), developed by Herbert Simon, Allen Newell, and J. C. Shaw in the late 1950s. GPS attacked formally specified problems, such as logic proofs and the Tower of Hanoi puzzle, using means-ends analysis: it compared the current state with the goal and applied operators chosen to reduce the difference between them. While revolutionary at the time, GPS could only work on problems that humans had first translated into its formal representation, and its search became unmanageable as problems grew larger.
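Means-ends analysis, the central heuristic of GPS, can be sketched in modern Python: find a difference between the current state and the goal, pick an operator that removes it, and recursively achieve that operator's preconditions first. This is a minimal illustration, not a reconstruction of GPS. The operator format (preconditions plus add and delete lists) is borrowed from the later STRIPS planner, and the tea-making domain and every name in it are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    """A STRIPS-style operator (an assumption; GPS itself used difference tables)."""
    name: str
    preconditions: frozenset
    add: frozenset
    delete: frozenset = frozenset()


def achieve_all(state, goals, operators, depth=0, max_depth=10):
    """Make every fact in `goals` true. Returns (new_state, plan) or None."""
    plan = []
    for goal in sorted(goals):  # sorted only to make the search order repeatable
        result = achieve(state, goal, operators, depth, max_depth)
        if result is None:
            return None
        state, steps = result
        plan.extend(steps)
    # Achieving a later goal can undo an earlier one, so check them all again.
    if not goals <= state:
        return None
    return state, plan


def achieve(state, goal, operators, depth, max_depth):
    """Reduce one difference: find an operator that adds `goal`, then apply it."""
    if goal in state:
        return state, []  # no difference left to reduce
    if depth >= max_depth:
        return None  # give up rather than recurse forever
    for op in operators:
        if goal not in op.add:
            continue  # this operator does not reduce the difference
        # Subgoal: first make the operator's preconditions true.
        result = achieve_all(state, op.preconditions, operators, depth + 1, max_depth)
        if result is None:
            continue
        new_state, plan = result
        return (new_state - op.delete) | op.add, plan + [op.name]
    return None


# Hypothetical toy domain for illustration only.
OPERATORS = [
    Operator("fill kettle", frozenset({"have kettle"}), frozenset({"kettle full"})),
    Operator("boil water", frozenset({"kettle full"}), frozenset({"hot water"})),
    Operator("brew tea", frozenset({"hot water", "have teabag"}),
             frozenset({"tea ready"}), frozenset({"have teabag"})),
]

if __name__ == "__main__":
    start = frozenset({"have kettle", "have teabag"})
    final_state, plan = achieve_all(start, frozenset({"tea ready"}), OPERATORS)
    print(plan)  # ['fill kettle', 'boil water', 'brew tea']
```

The final recheck in `achieve_all` is there because achieving one subgoal can undo another. This is one reason naive means-ends planners fail on some problems.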

The Rise of Expert Systems

In the 1970s and 1980s, a new approach to AI took hold with the development of expert systems. Expert systems were designed to mimic the decision-making processes of human experts in narrow domains, such as medicine or finance. They combined a knowledge base of if-then rules, elicited from human specialists, with an inference engine that applied those rules to reach decisions and solve problems.
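The split between a knowledge base and an inference engine can be illustrated with forward chaining, one common inference strategy. In forward chaining, the engine repeatedly fires any rule whose premises are all known facts until nothing new can be derived. This is a minimal sketch. The loan-screening rules are hypothetical, and real expert-system shells offered much richer rule languages. MYCIN, for example, reasoned backward from candidate diagnoses instead.

```python
# Hypothetical loan-screening rules: (premises that must all hold, conclusion).
RULES = [
    (frozenset({"stable income", "low debt"}), "good credit risk"),
    (frozenset({"good credit risk", "has collateral"}), "approve loan"),
    (frozenset({"missed payments"}), "high credit risk"),
]


def forward_chain(facts, rules):
    """Fire every rule whose premises are known until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)  # the inference engine derives a new fact
                changed = True
    return known


if __name__ == "__main__":
    derived = forward_chain({"stable income", "low debt", "has collateral"}, RULES)
    print(sorted(derived))
```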

One of the most famous expert systems was MYCIN, developed in the 1970s by Edward Shortliffe at Stanford University. MYCIN identified the bacteria likely to be causing a serious infection and recommended antibiotic treatment, using several hundred rules derived from expert medical knowledge, each qualified by a certainty factor expressing how strongly its evidence supported its conclusion. While MYCIN was successful in its domain, it could not learn from experience. Every new piece of knowledge had to be encoded by hand, and it could not adapt to situations its rules did not cover.
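MYCIN's certainty factors are numbers between -1 (definitely false) and 1 (definitely true), and they can be sketched in a few lines. The combination formulas below are the ones usually given for MYCIN-style certainty factors. The rule strengths and premise values in the example are hypothetical. Details such as the minimum certainty a premise needed before a rule could fire are omitted.

```python
def apply_rule(rule_cf, premise_cfs):
    """A conjunctive rule's conclusion is as certain as its weakest premise,
    scaled by the strength the expert assigned to the rule.
    Assumes the premises already passed the (omitted) firing check."""
    return rule_cf * min(premise_cfs)


def combine(cf1, cf2):
    """Combine two certainty factors in [-1, 1] bearing on the same hypothesis."""
    if cf1 >= 0 and cf2 >= 0:
        return cf1 + cf2 * (1 - cf1)  # two pieces of supporting evidence
    if cf1 < 0 and cf2 < 0:
        return cf1 + cf2 * (1 + cf1)  # two pieces of disconfirming evidence
    denominator = 1 - min(abs(cf1), abs(cf2))
    if denominator == 0:
        raise ValueError("cannot combine certain support with certain refutation")
    return (cf1 + cf2) / denominator  # conflicting evidence


if __name__ == "__main__":
    cf_a = apply_rule(0.7, [0.9, 0.8])  # hypothetical rule A with two premises
    cf_b = apply_rule(0.4, [1.0])       # hypothetical rule B with one premise
    print(round(combine(cf_a, cf_b), 3))  # 0.736
```

Supporting evidence accumulates without ever exceeding 1, so two moderately confident rules yield a stronger, but still uncertain, conclusion.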

The Neural Network Revolution

In the 1980s and 1990s, a new wave of AI research revived neural networks, an idea that dates back to the 1940s and 1950s. Neural networks are computational models loosely inspired by the structure and function of the brain. They consist of interconnected nodes, each of which combines the signals it receives and passes the result on to other nodes. Rather than following hand-written rules, neural networks learn from data and make predictions based on the patterns and relationships they find.
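The idea of nodes that combine and pass on signals can be made concrete with a forward pass through a tiny network. This sketch assumes a sigmoid activation and uses hand-picked, hypothetical weights rather than learned ones. Each node computes a weighted sum of its inputs plus a bias, and each layer's outputs become the next layer's inputs.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One layer of nodes: each takes a weighted sum of its inputs, adds a bias,
    and passes the result through a sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical hand-picked weights: 2 inputs -> 2 hidden nodes -> 1 output node.
W_HIDDEN = [[0.5, -0.4], [0.3, 0.8]]
B_HIDDEN = [0.0, -0.1]
W_OUT = [[1.2, -0.7]]
B_OUT = [0.05]


def forward(inputs):
    hidden = layer(inputs, W_HIDDEN, B_HIDDEN)  # hidden layer's outputs...
    return layer(hidden, W_OUT, B_OUT)          # ...are the output layer's inputs


if __name__ == "__main__":
    print(forward([1.0, 0.5]))
```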

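One of the key breakthroughs in neural network research was backpropagation, popularized by David Rumelhart, Geoffrey Hinton, and Ronald Williams in 1986. Backpropagation uses the chain rule of calculus to work out how much each weight and bias contributed to the network's error, so that an optimizer such as gradient descent can adjust them to reduce that error. This made it practical to train networks with hidden layers to learn complex patterns and relationships in data, leading to advances in image recognition, speech recognition, and natural language processing.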
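The chain-rule bookkeeping behind backpropagation fits in a short sketch. The network below is deliberately tiny: one input, one hidden node, one output, sigmoid activations, and a squared-error loss. The starting weights, input, and learning rate are arbitrary. A finite-difference check is included only to confirm that the backward pass computes the true gradient, and plain gradient descent performs the update.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Tiny two-layer network: x -> hidden node h -> output y."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y = sigmoid(w2 * h + b2)
    return h, y


def loss(params, x, target):
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2  # squared error


def backprop(params, x, target):
    """Gradients of the loss w.r.t. (w1, b1, w2, b2), computed with the chain rule
    from the output layer back to the hidden layer."""
    _, _, w2, _ = params
    h, y = forward(params, x)
    delta_out = (y - target) * y * (1 - y)       # dLoss / d(output pre-activation)
    delta_hidden = delta_out * w2 * h * (1 - h)  # error passed back through w2
    return [delta_hidden * x, delta_hidden, delta_out * h, delta_out]


def numerical_gradient(params, x, target, eps=1e-6):
    """Central finite differences, used only to check backprop's answer."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, x, target) - loss(down, x, target)) / (2 * eps))
    return grads


if __name__ == "__main__":
    params = [0.5, -0.2, 0.8, 0.1]  # arbitrary starting weights and biases
    x, target = 1.5, 0.0
    grads = backprop(params, x, target)
    learning_rate = 0.5
    updated = [p - learning_rate * g for p, g in zip(params, grads)]
    print(loss(params, x, target), "->", loss(updated, x, target))
```

Larger networks repeat the same two steps layer by layer, with matrices in place of scalars.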

The Deep Learning Revolution

In the late 2000s and early 2010s, a new era of AI research began with the rise of deep learning. Deep learning is a subfield of machine learning that uses neural networks with many layers (hence the term “deep”) to learn hierarchical representations of data, with early layers capturing simple features and later layers combining them into more abstract ones. Deep learning has revolutionized AI by enabling machines to learn complex patterns and relationships in data, leading to breakthroughs in computer vision, natural language processing, and robotics.

One of the most famous examples of deep learning is AlphaGo, a program developed by DeepMind that plays the ancient game of Go at a superhuman level. In March 2016 it defeated Lee Sedol, one of the world's strongest professional players, by four games to one in a historic match. AlphaGo combines deep neural networks, trained first on records of human expert games and then refined through reinforcement learning from games of self-play, with Monte Carlo tree search, demonstrating the power of AI to master complex tasks.
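The tree-search part of AlphaGo must decide which move to explore next. It trades off what the search has learned so far against what the policy network predicts. The sketch below implements a PUCT-style selection rule of the kind DeepMind described for AlphaGo and its successor AlphaGo Zero. The rule picks the move maximizing Q(s, a) + c_puct · P(s, a) · √(Σ_b N(s, b)) / (1 + N(s, a)). Only this selection step is shown. The networks, the simulations, and the backing-up of values are omitted, and the constant `c_puct = 1.5` and the move statistics are arbitrary.

```python
import math
from dataclasses import dataclass


@dataclass
class Edge:
    """Search statistics for one candidate move from the current position."""
    prior: float            # P(s, a): the policy network's probability for the move
    visits: int = 0         # N(s, a): how often the search has tried this move
    value_sum: float = 0.0  # W(s, a): total value backed up through this move

    @property
    def mean_value(self) -> float:
        # Q(s, a); treating unvisited moves as 0 is a simplifying assumption.
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(edges: dict, c_puct: float = 1.5) -> str:
    """Pick the move maximising Q(s, a) + U(s, a)."""
    total_visits = sum(edge.visits for edge in edges.values())

    def score(edge: Edge) -> float:
        # Exploration bonus: large for moves the network likes but the search
        # has rarely tried, shrinking as the move's own visit count grows.
        bonus = c_puct * edge.prior * math.sqrt(total_visits) / (1 + edge.visits)
        return edge.mean_value + bonus

    return max(edges, key=lambda move: score(edges[move]))


if __name__ == "__main__":
    # Hypothetical statistics for three moves.
    edges = {
        "a": Edge(prior=0.6, visits=10, value_sum=4.0),
        "b": Edge(prior=0.3, visits=1, value_sum=0.8),
        "c": Edge(prior=0.1),
    }
    print(select_move(edges))  # b
```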

The Quest for AGI

Despite the advances in AI research, achieving AGI remains a daunting challenge. While narrow AI systems excel at specific tasks, they lack the ability to generalize their knowledge and adapt to new situations. AGI requires machines to understand and reason about the world in a way that rivals human intelligence, a feat that has yet to be fully realized.

There are several key challenges facing the development of AGI, including:

1. Knowledge representation: AGI systems must be able to represent and understand knowledge in a way that is flexible and scalable. Rule-based systems depend on fixed, hand-written rules and heuristics. Neural networks, by contrast, store knowledge implicitly in their learned weights, where it is hard to inspect, update, or transfer to new situations.

2. Reasoning and inference: AGI systems must be able to reason and make inferences about the world, using logic and probabilistic reasoning to reach conclusions. Current AI systems struggle with complex reasoning tasks that require abstraction and generalization.

3. Learning and adaptation: AGI systems must be able to learn from experience and adapt to new challenges, using feedback and reinforcement to improve their performance over time. Current AI systems often require large amounts of labeled data and manual tuning to achieve optimal performance.

4. Ethical and societal implications: The development of AGI raises important ethical and societal questions, such as the impact on jobs, privacy, and security. AGI systems must be designed and implemented in a responsible and transparent manner to ensure their safe and beneficial use.
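Item 2 above mentions probabilistic reasoning. Its basic step is Bayes' rule, P(H | E) = P(E | H) P(H) / P(E), which revises the belief in a hypothesis H after observing evidence E. Here is a minimal sketch with hypothetical probabilities.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Posterior P(H | E) from P(H), P(E | H) and P(E | not H) via Bayes' rule."""
    joint_true = p_evidence_if_true * prior
    # Law of total probability: P(E) = P(E | H) P(H) + P(E | not H) P(not H)
    p_evidence = joint_true + p_evidence_if_false * (1 - prior)
    return joint_true / p_evidence


if __name__ == "__main__":
    # Hypothetical numbers: H = "it rained last night", E = "the lawn is wet".
    posterior = bayes_update(0.3, p_evidence_if_true=0.9, p_evidence_if_false=0.2)
    print(round(posterior, 3))  # 0.659
    # A second clue (a wet road), assumed conditionally independent of the
    # first given H, updates the belief again, using the posterior as the new prior.
    print(round(bayes_update(posterior, 0.8, 0.1), 3))
```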

Despite these challenges, researchers and scientists continue to push the boundaries of AI research in pursuit of AGI. Breakthroughs in deep learning, reinforcement learning, and cognitive science have brought us closer to achieving AGI than ever before. While the timeline for AGI remains uncertain, the potential benefits and risks of this technology are clear, making it a topic of intense interest and debate.

FAQs

Q: What is the difference between AGI and narrow AI?

A: AGI refers to a machine that possesses the ability to understand, learn, and apply knowledge in a way that rivals human intelligence across a wide range of domains. Narrow AI, on the other hand, refers to systems that excel at specific tasks, such as image recognition or language translation, but lack the ability to generalize their knowledge to new situations.

Q: When will AGI be achieved?

A: The timeline for achieving AGI remains uncertain, with estimates ranging from a few decades to a century or more. While significant progress has been made in AI research, the challenges of knowledge representation, reasoning, learning, and adaptation are still formidable obstacles to overcome.

Q: What are the ethical implications of AGI?

A: The development of AGI raises important ethical and societal questions, such as the impact on jobs, privacy, and security. AGI systems must be designed and implemented in a responsible and transparent manner to ensure their safe and beneficial use.

Q: How can we ensure the safe and beneficial use of AGI?

A: To ensure the safe and beneficial use of AGI, researchers and policymakers must collaborate to develop ethical guidelines and regulations for its development and deployment. Transparency, accountability, and oversight are essential to address the risks and challenges of AGI.

In conclusion, the pursuit of AGI has been a fascinating journey spanning decades of research and innovation. While AGI remains a daunting challenge, the breakthroughs and advancements in AI research have brought us closer than ever to this ultimate goal of artificial intelligence. With continued dedication and collaboration, the dream of creating machines that can think and reason like humans may one day become a reality.