The Evolution of Generative AI in Speech Synthesis

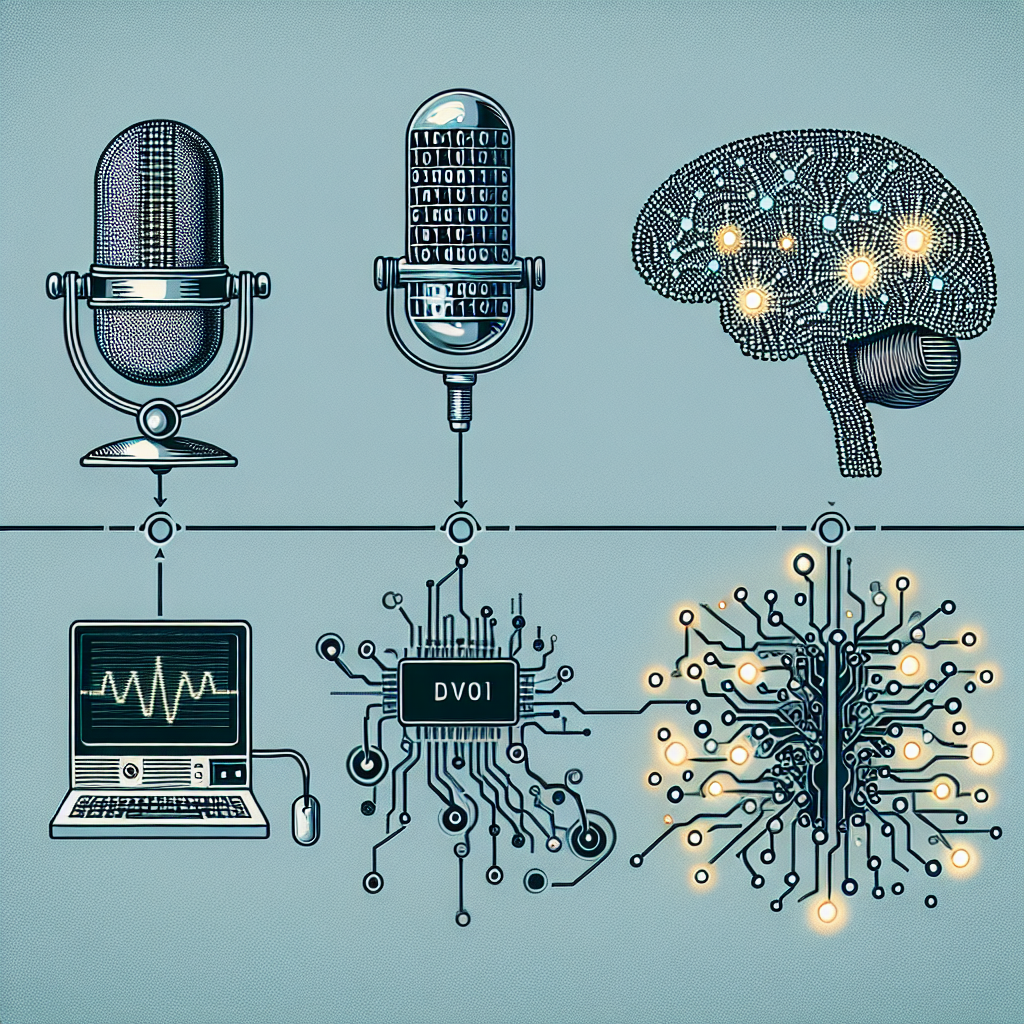

Speech synthesis, the artificial production of human speech, has advanced considerably since its earliest forms. Generative AI, a branch of artificial intelligence that creates new data rather than only analyzing existing data, has made synthetic speech more realistic than before by letting machines generate audio that closely follows human speech patterns and intonation.

In this article, we will explore the evolution of generative AI in speech synthesis, from its humble beginnings to the cutting-edge technologies being developed today. We will also delve into the advantages and challenges of using generative AI in speech synthesis, as well as some frequently asked questions about this exciting field.

The Early Days of Speech Synthesis

Speech synthesis dates back to the 18th century, when inventors began experimenting with mechanical devices that could produce speech-like sounds. One of the earliest examples was the speaking machine built by Wolfgang von Kempelen and described in 1791, which used a bellows, a reed, and a flexible resonator to imitate the lungs, vocal cords, and vocal tract.

In the 20th century, researchers began to develop electronic speech synthesis systems, first rule-based synthesizers that modeled the resonances of the vocal tract and later concatenative systems that joined recorded fragments of human speech. These early systems were limited in their capabilities and often produced robotic and unnatural-sounding speech. However, they laid the foundation for the more advanced speech synthesis technologies that followed.

The Rise of Generative AI in Speech Synthesis

Generative AI has changed speech synthesis by enabling machines to produce speech that sounds more natural than earlier systems could. Unlike traditional speech synthesis systems, which rely on pre-recorded speech samples and rule-based algorithms, generative AI models are trained on large datasets of human speech and learn the underlying patterns of spoken language, such as pronunciation, rhythm, and intonation, from the data itself.

One of the key advancements in generative AI for speech synthesis was the development of deep learning models, such as recurrent neural networks (RNNs) and convolutional neural networks (CNNs), which can learn complex patterns in speech data and generate highly realistic speech output. Systems built on these models can produce speech that listeners often find hard to distinguish from a human recording.

Recent Advances in Generative AI Speech Synthesis

In recent years, researchers have made significant strides in improving the quality and naturalness of generative AI speech synthesis systems. One of the most notable advances has been WaveNet, a deep learning model introduced by Google’s DeepMind team in 2016 that uses a neural network to generate raw audio waveforms directly, one sample at a time, rather than stitching together pre-recorded speech fragments.

WaveNet has been shown to produce speech that is more natural and realistic than previous speech synthesis systems, thanks to its ability to capture subtle nuances such as intonation, pitch, and timbre. When it was introduced, this made it one of the most advanced speech synthesis systems available.
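To make the idea of generating a waveform one sample at a time more concrete, here is a minimal Python sketch. It is not WaveNet: the function `toy_next_sample_distribution` is a hypothetical stand-in for the trained neural network, and all names are invented for illustration. What it does share with the published WaveNet design is the overall loop (predict a distribution over the next sample from the samples so far, draw from it, append, repeat) and the use of 8-bit mu-law quantization to turn each amplitude into one of 256 classes.

```python
import math
import random

MU = 255  # 8-bit mu-law companding, giving 256 discrete amplitude classes


def mu_law_encode(x: float) -> int:
    """Map an amplitude in [-1, 1] to an integer class in [0, 255]."""
    x = max(-1.0, min(1.0, x))
    compressed = math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)
    return int(round((compressed + 1) / 2 * MU))


def mu_law_decode(q: int) -> float:
    """Map an integer class in [0, 255] back to an amplitude in [-1, 1]."""
    compressed = 2 * q / MU - 1
    magnitude = math.expm1(abs(compressed) * math.log1p(MU)) / MU
    return math.copysign(magnitude, compressed)


def toy_next_sample_distribution(history: list[int]) -> list[float]:
    """Hypothetical stand-in for the trained network (an assumption).

    Returns one probability per class given the samples generated so far.
    This toy version only looks at the previous sample and favors nearby
    values, so the output drifts smoothly instead of sounding like speech.
    """
    prev = history[-1]
    weights = [math.exp(-abs(k - prev) / 4.0) for k in range(MU + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def generate(num_samples: int, seed: int = 0) -> list[float]:
    """Autoregressive loop: every new sample is fed back as context."""
    rng = random.Random(seed)
    history = [mu_law_encode(0.0)]  # start from silence
    for _ in range(num_samples):
        probs = toy_next_sample_distribution(history)
        next_q = rng.choices(range(MU + 1), weights=probs, k=1)[0]
        history.append(next_q)
    return [mu_law_decode(q) for q in history[1:]]


waveform = generate(16000)  # one second of audio at an assumed 16 kHz rate
```

The loop also hints at why this style of generation is computationally expensive: each second of audio requires thousands of sequential predictions, since every sample depends on the ones before it.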

Another major breakthrough in generative AI speech synthesis is the development of Tacotron and its successor Tacotron 2, created by researchers at Google. Tacotron is a sequence-to-sequence model that takes text as input and predicts a spectrogram, a time-frequency representation of the audio, which is then converted into a waveform. Because the whole mapping is learned from paired text and audio, it needs far less hand-engineered linguistic processing than earlier pipelines. Tacotron 2 builds upon this framework by incorporating a WaveNet-based vocoder to turn the predicted spectrograms into audio, improving the quality and naturalness of the generated speech.
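The two-stage structure of a system like Tacotron 2 (a model that turns text into spectrogram frames, followed by a vocoder that turns those frames into a waveform) can be sketched in a few lines of Python. Everything below is a toy under stated assumptions: `toy_acoustic_model` and `toy_vocoder` are hypothetical stand-ins for the two neural networks, and the band count, hop size, and sample rate are arbitrary. The sketch shows only how data flows between the stages, not how either network works internally.

```python
import math
from typing import Callable, List

Frame = List[float]  # one spectrogram frame: an energy value per frequency band


def toy_acoustic_model(text: str, bands: int = 4, frames_per_char: int = 2) -> List[Frame]:
    """Hypothetical stand-in for stage 1 (text -> spectrogram frames).

    A real sequence-to-sequence model learns how many frames each piece of
    text needs; this toy emits a fixed number of one-hot frames per character.
    """
    frames: List[Frame] = []
    for ch in text:
        active = ord(ch) % bands
        for _ in range(frames_per_char):
            frames.append([1.0 if b == active else 0.0 for b in range(bands)])
    return frames


def toy_vocoder(frames: List[Frame], hop: int = 80,
                sample_rate: int = 8000, base_hz: float = 200.0) -> List[float]:
    """Hypothetical stand-in for stage 2 (frames -> waveform).

    Each frame becomes `hop` audio samples, built as a sum of sinusoids
    weighted by the frame's band energies.
    """
    samples: List[float] = []
    for i, frame in enumerate(frames):
        for n in range(hop):
            t = (i * hop + n) / sample_rate
            value = sum(energy * math.sin(2 * math.pi * base_hz * (b + 1) * t)
                        for b, energy in enumerate(frame))
            samples.append(value / max(1, len(frame)))
    return samples


def synthesize(text: str,
               acoustic_model: Callable[[str], List[Frame]] = toy_acoustic_model,
               vocoder: Callable[[List[Frame]], List[float]] = toy_vocoder) -> List[float]:
    """Chain the two stages: text -> frames -> waveform."""
    return vocoder(acoustic_model(text))


audio = synthesize("hello")
```

Keeping the stages separate means either one can be swapped out independently, which is roughly what happened when a WaveNet-based vocoder was paired with the Tacotron framework.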

Advantages of Generative AI in Speech Synthesis

Generative AI has several advantages over traditional speech synthesis systems, including:

1. Improved Naturalness: Generative AI models are capable of capturing the subtle nuances and complexities of human speech, resulting in synthetic speech that sounds more natural and human-like.

2. Increased Flexibility: Because generative AI models learn a voice from data rather than from hand-written rules, they can be retrained or adapted for new speakers, languages, and speaking styles. This enables users to customize the speech output to suit their specific needs and preferences.

3. Enhanced Quality: Generative AI models such as WaveNet and Tacotron 2 have significantly improved the quality of synthetic speech, to the point that listeners in some evaluations rate it close to recorded human speech.

Challenges of Generative AI in Speech Synthesis

Despite its many advantages, generative AI speech synthesis also faces several challenges, including:

1. Data Requirements: Generative AI models require large datasets of human speech to be trained effectively, and collecting that data and training on it is time-consuming and resource-intensive.

2. Robustness: Generative AI models are susceptible to errors such as mispronounced, skipped, or repeated words, particularly on text unlike their training data, which can result in synthetic speech that is unnatural or unintelligible.

3. Ethical Concerns: The use of generative AI in speech synthesis raises ethical concerns related to privacy, identity theft, and the potential misuse of synthetic speech for malicious purposes.

Frequently Asked Questions

Q: How does generative AI differ from traditional speech synthesis techniques?

A: Generative AI uses deep learning models to generate speech that closely mimics human speech patterns and intonations, whereas traditional speech synthesis techniques rely on pre-recorded speech samples and rule-based algorithms.

Q: What are some of the applications of generative AI in speech synthesis?

A: Generative AI speech synthesis has a wide range of applications, including virtual assistants, voice-activated devices, language translation, and accessibility tools for individuals with speech impairments.

Q: How can generative AI improve the accessibility of speech synthesis technology?

A: Generative AI models such as WaveNet and Tacotron 2 have significantly improved the quality and naturalness of synthetic speech. This makes tools that depend on it, such as screen readers and communication aids, easier to listen to for long periods and more useful for individuals with speech impairments or other disabilities.

Q: What are some of the limitations of generative AI in speech synthesis?

A: Generative AI speech synthesis systems require large datasets of human speech to be trained effectively, and they are susceptible to errors and inaccuracies that can affect the quality of the synthetic speech output.

In conclusion, generative AI has transformed speech synthesis, enabling machines to generate speech that is more natural and human-like than earlier systems could. Deep learning models such as WaveNet and Tacotron 2 have raised the quality of synthetic speech considerably, bringing closer a future where artificial speech is indistinguishable from human speech. As researchers continue to push the boundaries of generative AI in speech synthesis, further developments can be expected in the years to come.